I am attending the session AI in Law: From Theory to Practice, live at ILTACon 2017. The International Legal Technology Association annual conference is the preeminent global legal tech conference.

The moderator is Andrew Arruda (CEO, ROSS Intelligence). Panelists are Jonathan Talbot (Director, IT Enterprise Systems, DLA Piper), Julian Tsisin (Legal Technology and Systems, Google Inc. Legal Department), Anna Vanderwerff Moca (Senior Manager, Strategic Projects, White & Case), and Amy Monaghan (Practice Innovations Manager, Perkins Coie).

As with all my live blog posts, I publish as a session ends. So please forgive any typos or errors in my understanding of what speakers say.

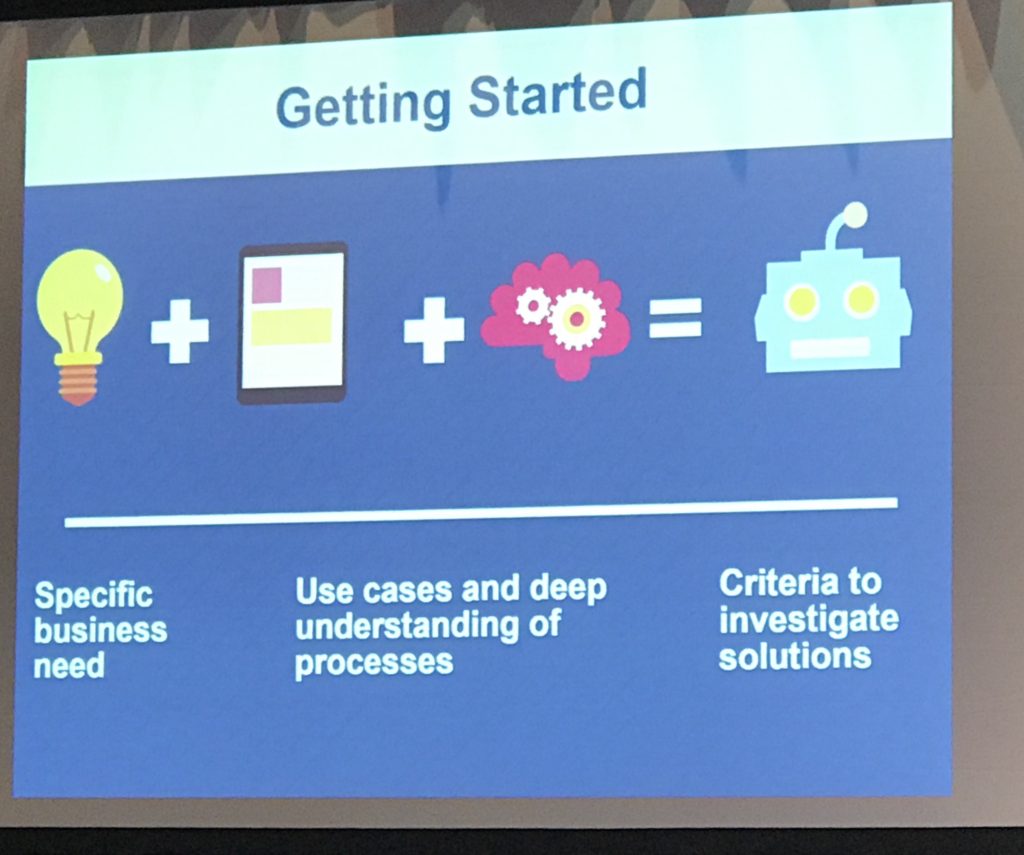

Getting Started with AI

Jonathan / DLA: DLA clients said due diligence was taking too long and costing too much. So the firm created a team for process mapping, standardizing documents, and checklists. This yielded 10 to 15% savings, but the firm wanted to go further. So the firm looked for products and selected Kira to solve the diligence use case. The theme is understanding the problem, then looking for a solution. It’s not about the tech itself.

Anna / W&C: For us, getting started was more about communication and investigation. We use our Intranet to promote the concept of AI and educate people about it. This succeeded: we got a lot of requests for more information. So we added more to the Intranet to inform our lawyers (and indirectly our clients). Some of this was driven by client RFPs, some of which ask about specific AI use cases. We’ve seen exponential growth in AI.

Julian / Google: From the client perspective, we don’t care about AI. We want to see efficiency gains. How is irrelevant.

Amy / Perkins: We started with use cases, looked at products, and selected some (Kira for doc review and Neota Logic for service delivery). It was a mix of addressing business needs, understanding tool capability, and finding new use cases. Addressing Andrew’s question about the pros and cons of being an early adopter: a con is constantly needing to address misconceptions (many of which derive from the hype). A pro is that one can get creative with the tools. Many tools have a lot of capability; they may not solve the entire problem but can get you more than 50% of the way. Starting early provides a leg up on designing new, efficiency-enhancing solutions. Another pro is establishing a good relationship with providers, which means access and input to the roadmap.

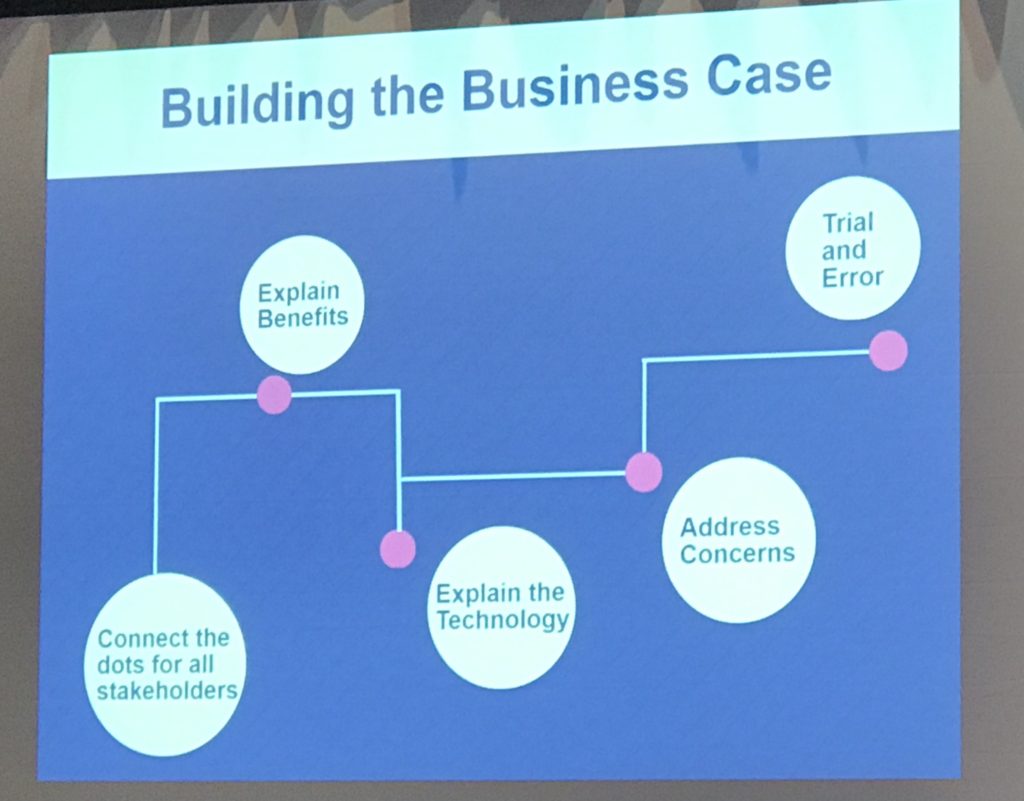

Building The Business Case

Anna / W&C: In narrow use cases, it’s easier to show the potential. But for platform solutions, which can do a lot, building a business case is harder. For those broader solutions, we are now starting with narrower uses so that we can build a business case. A big element of a business case is understanding how a solution will change how lawyers work. The change also includes explaining how the systems work.

Amy / Perkins: In due diligence, we can now surface 1000s of data points in seconds. This can be overwhelming for lawyers. Then they ask what else it does. But it’s key for them to focus on the fast delivery of so many provisions for review.

Jonathan / DLA: In due diligence, clients may start with a narrow request of what they want to review. Then they expand the number of clauses. Now, we don’t have to do a second pass review; we can just go back to the system output.

Julian / Google: Google has stated it wants to be an AI-first company. So it’s pretty easy for me to build the AI case in the law department. But the focus needs to be on solving business problems. We did a test of our machine learning versus lawyers on classification in a patent recall. We collected the data, analyzed it, and the results are compelling enough to make the case for change.

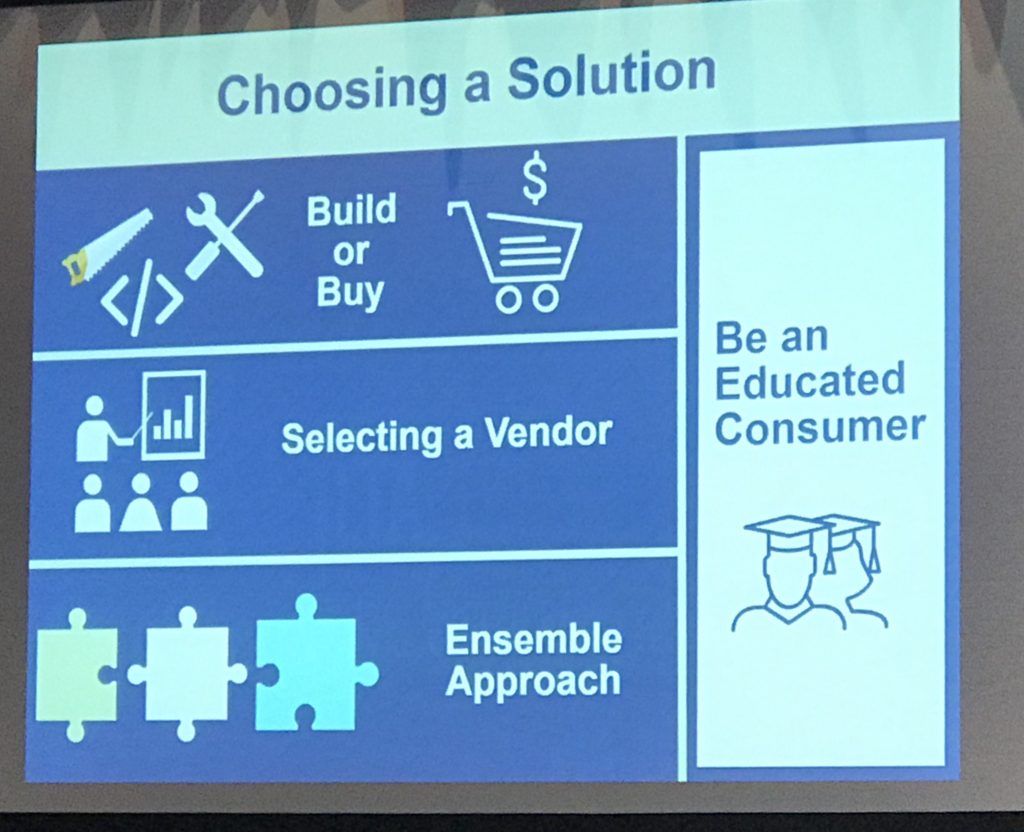

Choosing a Solution, Especially Build versus Buy

Julian / Google: Implementation of machine learning or other AI is not very different from that of any other software. You need a business case, then you find and evaluate software. You go through a build / buy analysis. When you go to market, you do a vendor evaluation. While it’s easier for Google to consider building, all buyers should think about the possible build path because that helps force you to think through what you need. It’s a way to help build the use case and problem solution. Once you talk to vendors, you will need to have a deep dialog; having gone through the build analysis, you will be in a better position to ask the right questions. Mentions that LexPredict has open sourced its machine learning ContraxSuite tool.

Amy / Perkins: If you roll out AI tools, you become the subject matter expert. This allows you to dispel myths lawyers have. Marketing will come to you to help answer RFPs or develop materials. You don’t have to become a machine learning expert but you do need to develop expertise. On build versus buy, you have to find out if your firm uses other open source tools. And evaluate the options on the market.

Jonathan / DLA: Buy what we can, build what we must. On choosing a solution, think about how it fits in a lawyer’s workflow. What does the UI look like – lawyers have to be able to learn it easily.

Amy / Perkins: Consider which tools integrate. We use Kira. It integrates with Neota. And with HighQ.

Anna / W&C: On build v buy: we have an associate who built his own proofreading tool. Tap these types of resources.

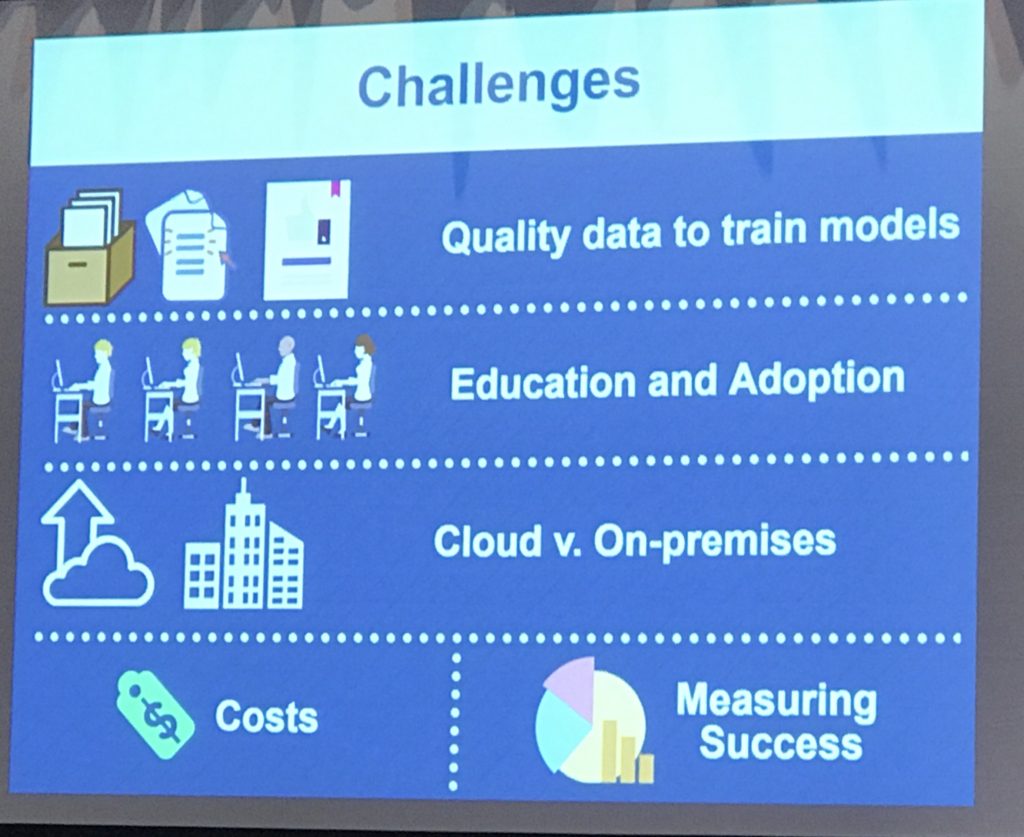

Challenges

Amy / Perkins: Challenges include: First, if you train your own models (as we do in Kira), you need quality data at high enough volumes. Law firms sit on mountains of data but a lot of it is not good. It was hard to build models because we did not have enough good data. Sometimes, we have a lot of good models but we may either have too little or too much variation across models to be good for training. Other challenges: Managing expectations. Change management. Customizing training to different user classes.

Anna / W&C: Having the right staff and skills in-house, and/or bandwidth among those who already have them. And you can’t go to LinkedIn to find new people so easily because the skills are so new.

Jonathan / DLA: Agrees with above. Finding resources can be hard if you don’t have staff because everyone already has day jobs.

Julian / Google: Getting quality training data in-house is a big challenge. We work with Seal Software. We did not have enough training data and had to outsource classifying a couple thousand patents to a law firm to develop the training data set.

Lessons Learned and Best Practices

Amy / Perkins: Education is key. You have to educate your lawyers and clients on what AI tools can do. You have to be the SME. For pilots, you need to find the right type of partners who have the need and can cope with the fact that not all pilots will work. You need to understand their motivations and personalities to select the right partners to be champions. Find clients with the right mindset to participate in testing. Don’t unleash new tools too widely to start. Start small, build the right framework and staffing so you are ready for broader roll out.

Jonathan / DLA: Don’t underestimate the amount of training (and how tailored it has to be) needed to grab lawyer attention and buy-in. You have to be ready for just-in-time training for when a need arises quickly. Be prepared for that.

Anna / W&C: Start small and start now. Upon initial reading, it seemed daunting. But once you start, it’s easier. Make sure to work with the right stakeholders – IT, GC, others – and keep bringing them along. Have a governance process.

Future Plans for AI

Jonathan / DLA: I’ve talked a lot about due diligence. We’ve trained 800 lawyers and worked on hundreds of deals. But we have much more we want to do: KM, clause banks, foreign languages. We need to find champions per new use. For example, in capital markets, we will work with partners to focus on specific, important clauses. We asked one client for a sample set of documents; we highlighted clauses of interest. Then we applied that to 100 docs and the work went very quickly.

Amy / Perkins: Use extracted deal terms for business intelligence (on the practice side, to know what’s market or what parties have agreed to in the past). Looking at the intersection of AI with other tech.

Julian / Google: I’m excited about doing more with expert systems. It’s a way to automate a lot of work we do. Excited about prediction of settlements and outcomes. It’s not a complicated AI problem, it’s a data challenge. We don’t have enough data to predict litigation. We may need to pool litigation results across companies in an anonymized way.

Anna / W&C: We will move from point solutions to enterprise solutions that become part of our broader KM program and take it deeper into the business of law.

Is AI Really Different?

Anna / W&C: We instinctively know it’s important. Data will drive new ways of work. There’s a big shift about to happen. It will significantly change how people do work.

Julian / Google: I focus on solution implementation. I focus on finding problems and making our lawyers more efficient. “I’ve had it with the hype on AI in legal. It’s software, it’s complicated. But it’s software.”

Amy / Perkins: Long term, machines will be more accurate than lawyers. We will have more time for qualitative conversations with lawyers. AI will help surface more and better content for KM (we won’t have to rely on lawyers telling us what is valuable).

Jonathan / DLA: AI is software but something big is going on here.

—-

Q&A not captured. Posted at 12:15 PDT.

Session Description

Artificial Intelligence (AI) and other cognitive technology tools are appearing more frequently in the legal space, often with much fanfare. But how is AI being used to leverage data, automate legal work, reduce costs and enhance efficiencies? Participate in this robust discussion to examine what legal AI is, what AI technology can deliver today, how AI is transforming the legal profession and what the future may hold for legal AI and the automation of legal advice. Setting up governance, training, and roll-out plans.

This is part 1 of 3 leading-edge sessions on AI.
